artificial intelligence and urban planning | thinkthinkthink #26

can AI enable the deconstruction of complex systems?

This issue on artificial intelligence and planning is 978 words long and it takes ~3.5 minutes to read. I hope you enjoy it.

Static urban planning is dead. It ignores complexity, cherry-picks data, and produces an urban fabric that cannot adapt. Even governance systems with data-driven decision making work, at best, within a context too narrow to capture the breadth of information an urban system's complexity demands. The alternative is a living, adaptive city DNA. DNA is not a single master plan; it is an optimized, conditional rule set that is expressed differently across tissues and contexts. Planning should encode the same logic, shifting urban strategy from static documents to continuously tended, conditional rule sets that respond to local signals and changing conditions as they arise. Such a system can now conceivably be built from four components: a large language model (LLM) doing much of the heavy lifting, spatial vector embeddings, a graph database, and blockchain-based governance.

1. The Genotype: Deconstructing the City’s Logic

First, we need a rigorous deconstruction of the city into its core primitives, translated into a computable representation. I previously called this the Urban Knowledge Graph but struggled to imagine how one could generate it. This is where LLMs provide a critical new capacity: they can methodically synthesize vast amounts of unstructured knowledge, the “what” of the city buried in decades of research, policy papers, and case studies from across the globe.

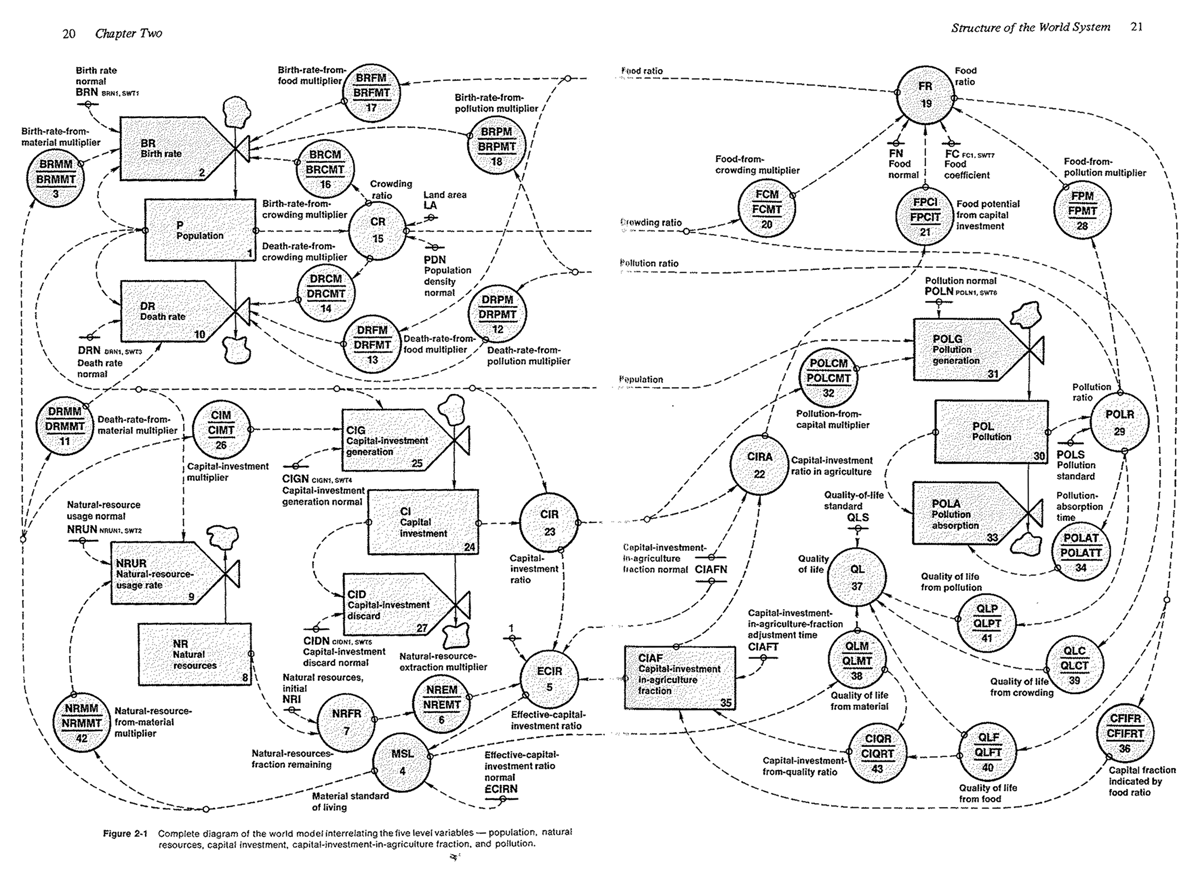

The concept isn’t new. Lowry’s A Model of Metropolis (1964) and Forrester’s Urban Dynamics (1969) were among the earliest attempts to capture the city as a formal system, each translating complex urban processes into structured, computable forms. Their models demonstrated that cities could be represented through interacting stocks, flows, and behavioral rules. More recently, work by Batty, Bettencourt, and Bertaud has reinforced the view that the city’s structure is governed by legible, decomposable primitives. What is new is the Large Language Model, which supplies the missing piece of the puzzle: a way to build such a representation from the literature at scale.

Recent advances in computation and data ingestion now let these LLMs act as tireless analytical engines. They can extract key entities, relationships, and causal narratives from large corpora. More importantly, they can help surface hidden patterns, non-linear correlations, and feedback loops that might elude conventional analysis.
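The extraction step might return something like the following, assuming the LLM is prompted to answer with a JSON list of causal claims. The field names and the `parse_claims` helper are hypothetical, not any particular tool’s format; the design choice worth keeping is that an edge without a citation is rejected before it ever reaches the graph.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class CausalClaim:
    """One cause-effect statement extracted from a document (hypothetical schema)."""
    source: str    # upstream variable, e.g. "transit_access"
    target: str    # downstream variable, e.g. "land_value"
    polarity: int  # +1: both move in the same direction; -1: opposite directions
    citation: str  # pointer to the passage the claim was taken from


def _is_valid(record) -> bool:
    """Require non-empty text fields and an integer polarity of +1 or -1."""
    if not isinstance(record, dict):
        return False
    text_ok = all(
        isinstance(record.get(key), str) and record[key].strip()
        for key in ("source", "target", "citation")
    )
    polarity = record.get("polarity")
    return text_ok and type(polarity) is int and polarity in (1, -1)


def parse_claims(raw_json: str):
    """Split raw LLM output into accepted claims and rejected records."""
    accepted, rejected = [], []
    for record in json.loads(raw_json):
        if _is_valid(record):
            accepted.append(CausalClaim(record["source"], record["target"],
                                        record["polarity"], record["citation"]))
        else:
            rejected.append(record)
    return accepted, rejected


# Illustrative model output: the second claim has no citation, so it is rejected.
raw = """[
  {"source": "transit_access", "target": "land_value", "polarity": 1,
   "citation": "hypothetical-study-17, p. 4"},
  {"source": "traffic_noise", "target": "land_value", "polarity": -1}
]"""
claims, rejected = parse_claims(raw)
print(len(claims), len(rejected))  # prints: 1 1
```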

When applied to urban research, these methods can surface the canonical variables that drive performance - from land markets and network connectivity to fiscal transfers and institutional constraints. The output can then be rendered as a complex system dynamics structure, with adaptive stocks, flows, and feedbacks that build on the lineage of Urban Dynamics.
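A toy stock-and-flow model makes the “stocks, flows, and feedbacks” vocabulary concrete. This is a minimal sketch in the spirit of Urban Dynamics, not a reproduction of Forrester’s model: the two stocks (housing units and households), the three flows, and every parameter value are illustrative assumptions. Crowding pushes households out and pulls construction in, so the two stocks are coupled through balancing feedback.

```python
from dataclasses import dataclass


@dataclass
class Params:
    migration_rate: float = 0.10     # household response to vacancy (+) or crowding (-)
    construction_rate: float = 0.05  # builder response to a tight market
    demolition_rate: float = 0.01    # share of the housing stock lost per year
    target_occupancy: float = 0.90   # households per unit above which building starts


def simulate(housing, population, years, p, dt=1.0):
    """Euler-integrate two coupled stocks; return (housing, population) after each step."""
    history = [(housing, population)]
    for _ in range(int(years / dt)):
        occupancy = population / housing  # above 1.0: more households than units
        # Flows per year, each computed from the current state of both stocks.
        net_migration = p.migration_rate * population * (1.0 - occupancy)
        construction = p.construction_rate * housing * max(occupancy - p.target_occupancy, 0.0)
        demolition = p.demolition_rate * housing
        # Stocks accumulate their net flows over the time step.
        housing += (construction - demolition) * dt
        population += net_migration * dt
        history.append((housing, population))
    return history


history = simulate(housing=1000.0, population=1200.0, years=30, p=Params())
for year in (0, 10, 20, 30):  # with dt = 1.0, list index equals year
    h, pop = history[year]
    print(f"year {year}: {h:.0f} units, {pop:.0f} households, occupancy {pop / h:.2f}")
```

Each flow reads only the current state of the stocks, which is what allows the same loop to scale to many coupled variables once the knowledge graph supplies them.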

This deconstruction must produce a living diagram of interdependence, not a decorative map.

Nodes represent operative constructs: floor area ratio, commuting time budgets, parcel-level viability, social added value and tens of thousands of other variables that drive an urban system.

Edges encode empirically grounded relationships, each with a polarity (positive or negative), a sensitivity range, and any relevant delay.

This knowledge graph can ingest new evidence and recalibrate as new studies are indexed. Scenario questions then become structured interventions: What happens if we reduce travel cost on a corridor? The model returns directional effects with uncertainty bounds and, crucially, cites the evidence underpinning each link, ensuring auditability.
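The corridor question can be sketched as a walk over a signed causal graph. In this minimal sketch every edge carries an elasticity range, a delay, and a citation; an intervention is pushed along each acyclic path with interval arithmetic, and the answer returns low and high bounds together with the citations used. The variable names, ranges, delays, and study labels are hypothetical, and summing path contributions is a simplifying assumption (genuine feedback loops would need simulation rather than path enumeration).

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Edge:
    source: str
    target: str
    sensitivity: tuple  # elasticity range (low, high); negative values mean a negative link
    delay_years: float
    citation: str


@dataclass
class Effect:
    low: float = 0.0
    high: float = 0.0
    max_delay: float = 0.0
    citations: list = field(default_factory=list)


def interval_mul(a, b):
    """Interval product: bounds are the min and max of the four corner products."""
    corners = [a[0] * b[0], a[0] * b[1], a[1] * b[0], a[1] * b[1]]
    return min(corners), max(corners)


def propagate(edges, start, shock, max_depth=4):
    """Push a % change in `start` along every acyclic path of up to `max_depth` links.

    Contributions from different paths are added, which assumes small, roughly
    additive effects; loops are cut rather than simulated.
    """
    outgoing = {}
    for edge in edges:
        outgoing.setdefault(edge.source, []).append(edge)
    results = {}

    def walk(node, effect, delay, cites, visited, depth):
        if depth == max_depth:
            return
        for edge in outgoing.get(node, []):
            if edge.target in visited:
                continue  # following this edge would close a loop on the current path
            step = interval_mul(effect, edge.sensitivity)
            step_delay = delay + edge.delay_years
            step_cites = cites + [edge.citation]
            acc = results.setdefault(edge.target, Effect())
            acc.low += step[0]
            acc.high += step[1]
            acc.max_delay = max(acc.max_delay, step_delay)
            for cite in step_cites:
                if cite not in acc.citations:
                    acc.citations.append(cite)
            walk(edge.target, step, step_delay, step_cites, visited | {edge.target}, depth + 1)

    walk(start, (shock, shock), 0.0, [], {start}, 0)
    return results


edges = [
    Edge("travel_cost", "accessibility", (-0.8, -0.4), 0.0, "[hypothetical study A]"),
    Edge("accessibility", "land_value", (0.2, 0.5), 2.0, "[hypothetical study B]"),
    Edge("land_value", "housing_supply", (0.1, 0.2), 3.0, "[hypothetical study C]"),
    Edge("accessibility", "housing_supply", (0.1, 0.3), 4.0, "[hypothetical study D]"),
]
# Scenario: cut travel cost on a corridor by 10%.
for variable, eff in propagate(edges, "travel_cost", -10.0).items():
    print(f"{variable}: {eff.low:+.2f}% to {eff.high:+.2f}%, "
          f"fully felt after ~{eff.max_delay:g} yrs, sources {eff.citations}")
```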

2. The Phenotype: Grounding Policy in Place

The knowledge graph encodes the rules, but the geospatial embeddings determine the context: the soil in which the rules grow. A traditional map says ‘pavement.’ An embedding encodes something closer to ‘pavement, surrounded by 30-year-old oak trees, 15% annual humidity change, two schools, a bike lane and a 95th percentile noise level.’ These signatures act as place-based priors, letting the system retrieve analogous urban fragments (places with similar signatures) and condition how the knowledge graph’s rule set is expressed locally.
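Retrieving analogous urban fragments amounts to a nearest-neighbour search over embedding vectors. The sketch below ranks cells by cosine similarity with a brute-force scan; the 4-dimensional toy vectors and cell ids are made up (real geospatial embeddings have many more dimensions), and at city or global scale an approximate nearest-neighbour index would replace the linear scan.

```python
import math


def cosine(a, b):
    """Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for orthogonal ones."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def most_similar(query, library, k=2):
    """Return the k (cell_id, similarity) pairs closest to `query`, best first."""
    scored = [(cell_id, cosine(query, vector)) for cell_id, vector in library.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy 4-dimensional embeddings; cell ids and values are made up.
library = {
    "cell_a": [0.9, 0.1, 0.0, 0.4],
    "cell_b": [0.1, 0.9, 0.3, 0.0],
    "cell_c": [0.8, 0.2, 0.1, 0.5],
}
site = [0.85, 0.15, 0.05, 0.45]
print(most_similar(site, library))
```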

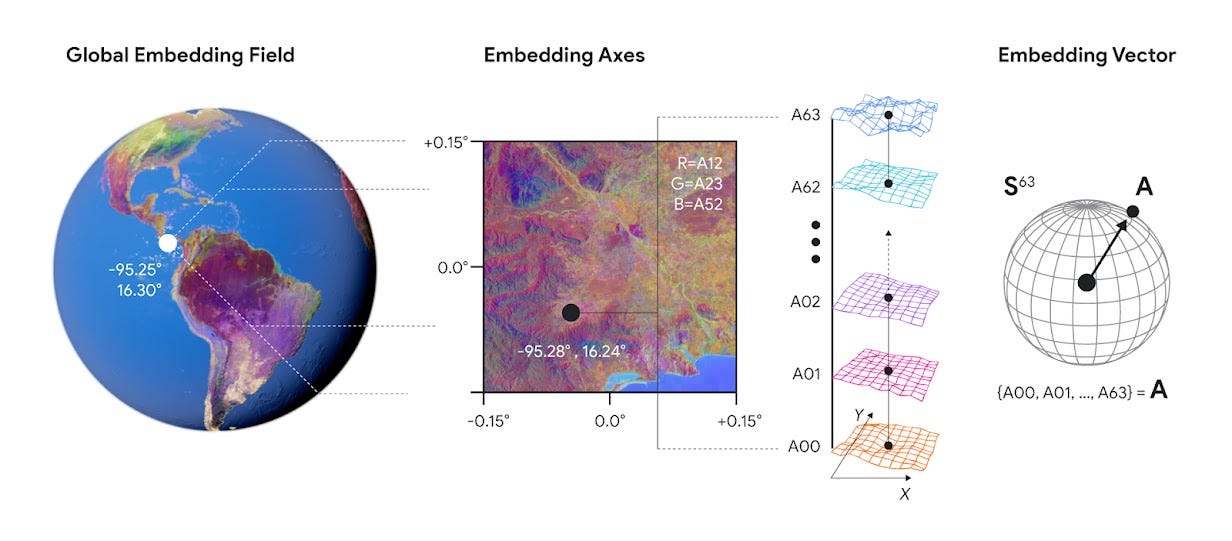

The second component provides the urban-geospatial context. Here, geospatial foundation models and globally consistent embeddings are the frontier. Google’s AlphaEarth Foundations, for example, provides 64-dimensional vectors at 10-meter resolution that summarize annual surface conditions. Merging these satellite-derived, top-down signals with the bottom-up map data of the Overture Maps Foundation (buildings, places, transportation networks) would give each location both kinds of information. The resulting vectors are well suited to clustering, similarity search, and change detection.
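Change detection can be sketched with the same kind of vectors: compare each cell’s embedding in two annual snapshots and flag cells whose cosine similarity drops below a threshold. The 0.9 threshold, cell ids, and toy 4-dimensional values are assumptions for illustration, not calibrated settings for any real dataset.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def changed_cells(before, after, threshold=0.9):
    """Flag cells present in both snapshots whose similarity falls below `threshold`."""
    flagged = []
    for cell_id in sorted(before.keys() & after.keys()):
        similarity = cosine(before[cell_id], after[cell_id])
        if similarity < threshold:
            flagged.append((cell_id, round(similarity, 3)))
    return flagged


# Toy embeddings for two annual snapshots; cell_b changes character between years.
year_1 = {"cell_a": [0.9, 0.1, 0.0, 0.4], "cell_b": [0.1, 0.9, 0.3, 0.0]}
year_2 = {"cell_a": [0.88, 0.12, 0.02, 0.41], "cell_b": [0.7, 0.2, 0.1, 0.6]}
print(changed_cells(year_1, year_2))
```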

For indexing, any of several hierarchical cell systems, such as Uber’s H3 or Google’s S2, supplies a practical global tiling scheme. Every location in any city can then be assigned to a cell with its own embedding, giving us a consistent way to bind inputs to a specific place.
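A hierarchical tiling can be sketched with a simple quadtree on a plain latitude/longitude grid. This is a stand-in for H3 or S2, not their algorithm: H3 tiles the globe mostly with hexagons on an icosahedron and S2 projects a cube onto the sphere, and both keep cell areas far more uniform than this grid does. The helper names `cell_key` and `bind` are hypothetical; the point is that a cell’s key prefix identifies its parent, so embeddings bound at a fine level roll up cleanly to coarser ones.

```python
from collections import defaultdict


def cell_key(lat: float, lon: float, level: int) -> str:
    """Quadtree key on a plain lat/lon grid; one digit per level.

    Digits: 0 = north-west, 1 = north-east, 2 = south-west, 3 = south-east.
    A cell's parent is its key minus the last digit.
    """
    south, north, west, east = -90.0, 90.0, -180.0, 180.0
    digits = []
    for _ in range(level):
        mid_lat, mid_lon = (south + north) / 2, (west + east) / 2
        digit = 0
        if lon >= mid_lon:
            digit += 1
            west = mid_lon
        else:
            east = mid_lon
        if lat < mid_lat:
            digit += 2
            north = mid_lat
        else:
            south = mid_lat
        digits.append(str(digit))
    return "".join(digits)


def bind(samples, level):
    """Average all (lat, lon, embedding) samples that fall in the same cell."""
    grouped = defaultdict(list)
    for lat, lon, vector in samples:
        grouped[cell_key(lat, lon, level)].append(vector)
    return {
        key: [sum(values) / len(vectors) for values in zip(*vectors)]
        for key, vectors in grouped.items()
    }


# Two nearby samples in central Tirana (toy 2-d embeddings) share a level-8 cell.
samples = [(41.3275, 19.8187, [1.0, 0.0]), (41.3280, 19.8190, [0.0, 1.0])]
cells = bind(samples, level=8)
key = cell_key(41.3275, 19.8187, 8)
print(key, cells[key], key[:-1])  # the last value is the parent cell's key
```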

3. The Regulator: The Future of Governance

The core organizational engine for this paradigm is the Decentralized Autonomous Organization (DAO). A DAO is an organization whose rules are stored and executed autonomously by smart contracts on a blockchain, with external data feeds (oracles) letting it sense the environment. This structure allows for a revolutionary, recursive form of local government, in which individuals can belong to hundreds of interlocking community organizations at various administrative levels. It also enables a modern, continuously adapting Pattern Language, in which interactive blueprints respond automatically to neighboring developments.

To keep collective decision-making equitable and efficient (the political solvency of the DAO), the system can use Quadratic Voting (QV). Rather than a simple binary vote, QV measures the intensity of people’s preferences. Voters spend “voice credits” that convert to votes by their square root, so casting n votes on an issue costs n² credits and stronger conviction carries an escalating cost. This dampens the influence of the largest stakeholders and lets an intensely affected minority outweigh an indifferent majority, greatly mitigating the tyranny-of-the-majority problem and providing a powerful, collaborative framework for localized, adaptive urban growth.
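The quadratic rule can be sketched as a small tally function. It assumes every voter holds one verified identity and the same budget of 100 voice credits (an arbitrary figure); the voter and proposal names are hypothetical.

```python
import math


def quadratic_tally(ballots, budget=100):
    """Tally quadratic votes.

    ballots maps each voter to {proposal: credits}; positive credits support a
    proposal, negative credits oppose it. Spending c credits buys sqrt(|c|) votes,
    i.e. n votes cost n**2 credits.
    """
    totals = {}
    for voter, allocation in ballots.items():
        spent = sum(abs(credits) for credits in allocation.values())
        if spent > budget:
            raise ValueError(f"{voter} spent {spent} credits; budget is {budget}")
        for proposal, credits in allocation.items():
            votes = math.copysign(math.sqrt(abs(credits)), credits)
            totals[proposal] = totals.get(proposal, 0.0) + votes
    return totals


ballots = {
    "developer": {"tower": 100},              # all credits on one issue: 10 votes
    "resident_1": {"tower": -25},             # 5 votes against
    "resident_2": {"tower": -25},             # 5 votes against
    "resident_3": {"tower": -16, "park": 9},  # 4 against the tower, 3 for a park
}
print(quadratic_tally(ballots))  # {'tower': -4.0, 'park': 3.0}
```

Counted one credit per vote, the developer’s 100 credits would outweigh the residents’ combined 66; under the square-root rule the residents’ 14 votes outweigh the developer’s 10.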

A New Planning Instrument

Linking these components might yield the future of practical urban planning and growth. The Genotype encodes the universal logic. The Phenotype provides the local context. The Regulator channels the political will. A query (e.g., siting a new school) is no longer answered by committee or truncated data, but by traversing auditable causal pathways, conditioned on local signatures, and answered with quantified trade-offs and full citations. This is the shift from planning as a static blueprint to planning as a continuously updated, DNA-based operating system that finally allows the city to learn and adapt at the speed of life.
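The DNA metaphor itself can be sketched as one shared rule set evaluated against each place’s local signals, so the same “genotype” expresses a different parameter in each context and records which rules fired. The rules, the 400-meter transit threshold, the flood-risk cut-off, and the floor area ratio values are hypothetical; in the full system the signals would come from the embeddings and oracles, and the rules from the knowledge graph and DAO votes.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Signals = Dict[str, float]


@dataclass(frozen=True)
class Rule:
    name: str
    applies: Callable[[Signals], bool]  # condition on local signals
    parameter: str                      # parameter the rule sets when it fires
    value: float
    citation: str


# Hypothetical rule set: the same "genotype" everywhere, evaluated top to bottom,
# with later matching rules overriding earlier ones.
RULES = [
    Rule("baseline density", lambda s: True, "max_far", 2.0, "[hypothetical rule 1]"),
    Rule("transit bonus", lambda s: s["walk_to_transit_m"] <= 400, "max_far", 4.0,
         "[hypothetical rule 2]"),
    Rule("flood cap", lambda s: s["flood_risk"] >= 0.5, "max_far", 1.5,
         "[hypothetical rule 3]"),
]


def express(rules, signals):
    """Return the locally expressed parameters and an audit trail of the rules that fired."""
    expressed, trail = {}, []
    for rule in rules:
        if rule.applies(signals):
            expressed[rule.parameter] = rule.value
            trail.append(f"{rule.name} {rule.citation}")
    return expressed, trail


places = {
    "station district": {"walk_to_transit_m": 250, "flood_risk": 0.1},
    "riverside": {"walk_to_transit_m": 300, "flood_risk": 0.7},
    "outskirts": {"walk_to_transit_m": 1500, "flood_risk": 0.2},
}
for place, signals in places.items():
    print(place, *express(RULES, signals))
```

Ordering the rules so that constraints come last is the simplest way to let a hazard override a bonus; a fuller version might resolve conflicts explicitly instead.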

📚 One Book

Antifragile by Nassim Nicholas Taleb

This book introduces the concept of antifragility, defined as the property of a system that benefits from stressors, volatility, and random events rather than merely withstanding or resisting them. Fragile systems break under disorder; robust systems survive it; antifragile systems improve when exposed to it. The core argument is that in a highly uncertain and unpredictable global environment, characterized by “Black Swan” events, optimizing for robustness is insufficient. Instead, policy, economic, and technical structures should be designed to be inherently antifragile, so that they gain from prediction errors, external shocks, and systemic variation.

📝 Three Links

Effective context engineering for AI agents by Anthropic

From prompting to context

Universal Model of Urban Street Networks by Marc Barthelemy & Geoff Boeing

Generating synthetic urban street networks

The Complete Guide to Atomic Note-Taking by Sascha

On an interconnected web of personal notes

This was the twenty-sixth issue of thinkthinkthink, a periodic newsletter by Joni Baboci on cities, science and complexity. If you liked it, why not subscribe?

Have a question or want to add something to the discussion? Don’t hesitate to reach out at dbaboci@gmail.com or @dbaboci. Thanks for reading.

The Urban Knowledge Graph concept paired with LLMs solving the synthesis problem is clever. Forrester and Lowry had the right intuition but lacked the computational tools to ingest vast unstructured datasets. Combining geospatial embeddings with graph databases creates something genuinely new: planning that can query analogous urban contexts and surface trade-offs with full citations. The DAO governance layer is ambitious but makes sense if you want continuous adaptation instead of static master plans.